PCflanks might be a scam, as they say only Outpost and Tiny passed (I suspect they are endorsing these).

Funny thing is they haven't included the major firewall vendors. Tiny is a very good firewall; I used it a few years ago. Still....

As for the question about the methodology used in the matousec tests:

Methodology and rules

The tested firewalls are installed on Windows XP Service Pack 3 with Internet Explorer 6.0 set as the default browser. The products are configured to their highest usable security settings and tested with this configuration only. We define the highest security settings as settings that the user is able to set without advanced knowledge of the operating system. This means that the user, with the skills and knowledge we assume, is able to go through all forms of the graphic user interface of the product and enable or disable or choose among several therein given options, but is not able to think out names of devices, directories, files, registry entries etc. to add to some table of protected objects manually.

There are several testing levels in Firewall Challenge. Each level contains a selected set of tests and it also contains a score limit that is necessary to pass this level. All products are tested with the level 1 set of tests. Those products that reach the score limit of level 1 and thus pass this level will be tested in level 2 and so on until they reach the highest level or until they fail a limit of some level.
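To make the level progression above concrete, here is a minimal sketch of how I read it. The function name, the input lists, and the numbers in the usage note are my own assumptions for illustration; the quoted text gives no actual score limits.

```python
def highest_level_passed(level_scores, level_limits):
    """Count how many consecutive levels a product passes, starting at level 1.

    level_scores: the score (0-100) the product achieved at each level, in order.
    level_limits: the score limit needed to pass each level, in order.
    Both lists are hypothetical inputs; the real limits are not given here.
    """
    passed = 0
    for score, limit in zip(level_scores, level_limits):
        if score < limit:
            break  # failing the limit of a level ends the testing
        passed += 1
    return passed
```

For example, with made-up limits of 50, 60 and 70, a product scoring 90, 80 and 40 would pass two levels and stop at the third.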

Most of the tests are part of Security Software Testing Suite, which is a set of small tests that are all available with source code. Using this open suite makes the testing as transparent as possible. For each test the tested firewall can get a score between 0% and 100%. Many of the tests can only be passed or failed, so the firewall can get only a 0% or 100% score. A few tests have two different levels of failure, so it is possible to get a 50% score from them. The rest of the tests have their specific scoring mapped between 0% and 100%. It should be noted that the testing programs are not perfect and in many cases they use methods that are not 100% reliable to recognize whether the tested system passed or failed the test. This means that the testing program might report that the tested system passed the test even if it failed; this is called a false positive result. The official result of the test is always set by an experienced human tester in order to filter out false results. The opposite situations, false negative results, should be rare but are also eliminated by the tester.
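A rough sketch of the per-test scoring described above, assuming three outcomes for the simple tests and a tester who can override what the test program reported. The outcome labels and the function name are hypothetical, not part of the actual suite.

```python
# Hypothetical outcome labels; the suite itself may name these differently.
OUTCOME_SCORES = {"pass": 100, "partial_fail": 50, "fail": 0}


def official_score(reported_outcome, tester_outcome=None):
    """Return the firewall's score (in percent) for one test.

    reported_outcome: what the test program reported.
    tester_outcome: the human tester's verdict, if it differs
    (e.g. when the program reports a false positive).
    """
    outcome = tester_outcome if tester_outcome is not None else reported_outcome
    return OUTCOME_SCORES[outcome]
```

So if the program reports a pass but the tester sees that the firewall actually failed, the official score is 0%, not 100%.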

To be able to make right decisions in disputable situations, we define the test types. Every test has some defined type. Tests of the same type always attempt to achieve the same goal. Here is a list of the defined types and their goals:

General bypassing test: These tests are designed to bypass the protection of the tested product in general; they do not target a specific component or feature. This is why they attempt to perform various privileged actions to verify that the protection was bypassed. These tests succeed if at least one of the privileged actions succeeds. Like the termination tests, general bypassing tests cannot be used without modifying the configuration file.

Leak-test: Leak-tests attempt to send data to the Internet server, this is called leaking. Most of the leak-tests from Security Software Testing Suite are configured to use a script on our website that logs leaks to our database by default. For such tests, you can use My leaks page to see whether the test was able to transmit the data. For leak-tests that do not use this script, we use a packet sniffer in unclear situations.

Performance test: Performance tests measure the impact of the tested product on system performance. The values measured by the tests on the system with the tested product installed are compared to the values measured on the clean machine. All software affects system performance at least a little. To give products a chance to score 100% in these tests, we usually define some level of tolerance here. This means that if the performance is affected only a bit, the product may score 100%.

Spying test: These tests attempt to spy on users' input or data. Keyloggers and packet sniffers are typical examples of spying tests. Every piece of the data they obtain is searched for a pattern, which is defined in the configuration file. These tests usually succeed if the given pattern has been found.

Termination test: These tests attempt to terminate or somehow damage processes, or their parts, of the tested product. The termination test usually succeeds if at least one of the target processes, or at least one of their parts, was terminated or damaged. All the termination tests from our suite must be configured properly using the configuration file before they can be used for tests.

Other: Tests that do not fit any of the previously defined types are of this type. These tests, for example, may check stability or reliability of the tested product.
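The tolerance idea in the performance tests above could look something like this. It is only a sketch: the 5% default and the function name are my assumptions, since the quoted methodology does not say what tolerance is actually used.

```python
def within_tolerance(clean_value, measured_value, tolerance=0.05):
    """Check whether the slowdown caused by the product is within tolerance.

    clean_value: measurement (e.g. seconds) on the clean machine.
    measured_value: the same measurement with the product installed.
    tolerance: allowed relative slowdown; 0.05 (5%) is an assumed value.
    """
    slowdown = (measured_value - clean_value) / clean_value
    return slowdown <= tolerance
```

With these assumed numbers, a task that takes 100 seconds on the clean machine and 104 seconds with the firewall installed would still count as a full pass, while 120 seconds would not.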

Cheers,

Miki

Just uninstall ESS, install NOD32 (use your current license info for username/password) and Comodo Internet Security FREE (choose just the Firewall option when asked what you want to install, as it will also offer to install Comodo AV, which is nowhere near NOD32). And enjoy.

Either you have a firewall or you don't. If this is true (that suites don't do well in this test), how come KIS (also a suite) scored 89%, reaching level 9 of protection? :huh: I believe it's a lame excuse for a crappy performance.

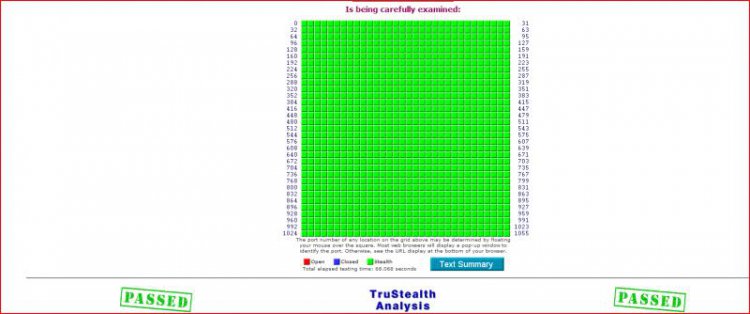

... and of course I also passed the GRC leaktest. PCflanks might be a scam, as they say only Outpost and Tiny passed (I suspect they are endorsing these). See results below for both leak tests.
